Title: Docker commands Cheat Sheet by angelceed - Cheatography.com

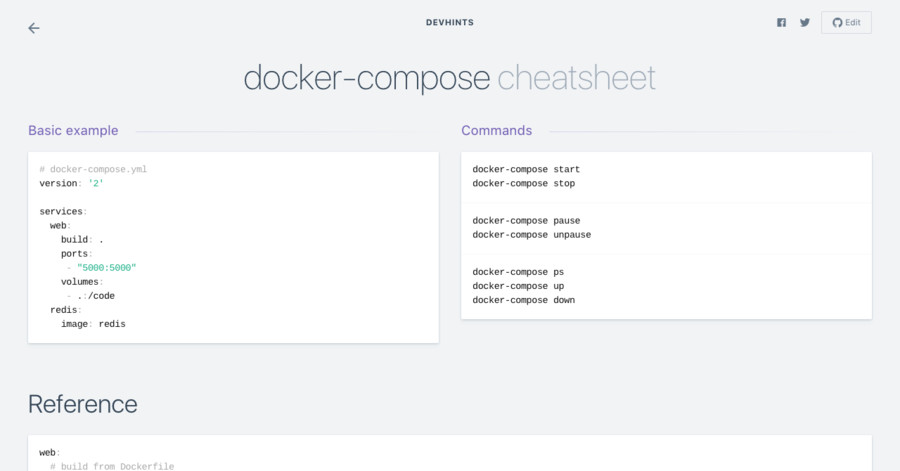

- Docker Swarm Commands Cheat Sheet

- Dockerfile Commands Cheat Sheet

- Docker Compose Commands Cheat Sheet

- Dockerfile Commands

- Docker Commands The Ultimate Cheat Sheet

Introduction

Docker is a tool for bundling together applications and their dependencies into images that can then be run as containers on many different types of computers.

Docker and other containerization tools are useful to scientists because:

- It greatly simplifies distribution and installation of complex workflows that have many interacting components.

- It reduces the friction of moving analyses around. You may, for example, want to explore a tool or dataset on your own laptop and then move that analysis environment to a cluster. Rather than spend time configuring your laptop and then spend time configuring your server, you can run the analyses within Docker on both.

- It is a convenient way to share your analyses and to help make them transparent and reproducible.

- It makes a lot of technical advances and investments made in industry accessible to scientists.

- Docker containers start very quickly and add little runtime overhead. This makes Docker a very convenient way to create clean analysis environments.

There are some potential down sides:

- You have to learn a bit about Docker, in addition to everything else you are already doing.

- Docker wasn't designed to run on a large shared computer with many users, like an academic research cluster. By default, anyone who can run Docker commands effectively has root access on the host, and this and other security concerns have led most academic research computing centers not to support running Docker containers on their clusters (in fact, I'm not aware of any that do). But you can run it on your own computer and on a variety of cloud computing services. If you like containers but Docker is not a great fit for your own research computing environment, you should also consider Singularity.

- Docker creates another layer of abstraction between you and your analyses. It requires, for example, extra steps to get your files in and out of the analysis environment.

There are a variety of great tutorials out there on docker already. These include:

The purpose of this document is to present a streamlined introduction to common tasks in our lab, and to serve as a quick reference for those tasks so we know where to find them and can execute them consistently.

Some Docker fundamentals

- A Docker Image is the snapshot that containers are launched from. Images are roughly equivalent to a virtual machine image, and they are read-only.

- A Docker Container is a running instance of an image. It contains an app and everything it needs to run. Containers are ephemeral: changes made inside a container are not written back to its image, and they are lost for good when the container is removed.

- A Docker Registry is where images are stored. A registry can be public (e.g. DockerHub) or your own. One registry can contain multiple repos.

- Docker has a server/client architecture. The docker command is the command line client. Kitematic was a GUI client; Docker Desktop now provides a graphical dashboard. Servers and clients can be on the same or different machines.

Getting set up

Head to http://docker.com, click on 'Get Docker', and follow the instructions. Once it is running, go to the Docker menu > Preferences... > Advanced and adjust the computing resources (CPUs, memory) that Docker can use.

To test your Docker installation, run:
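One common check, sketched below as a small shell function, is to confirm that the client can reach the Docker server with docker info and then run Docker's tiny hello-world image. The function name check_docker is hypothetical, and --rm (which deletes the test container when it exits) is an extra choice not made in the text.

```shell
# Hypothetical helper: verify that the Docker client can reach the daemon
# and that an image can be pulled and run.
check_docker() {
  # Fails (non-zero exit) if the daemon is not running or not reachable.
  docker info > /dev/null || return 1
  # Pulls hello-world from Docker Hub on first use; --rm removes the container afterwards.
  docker run --rm hello-world
}
# Usage: check_docker
```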

Docker commands

Here are some of the commands you will use most often with Docker.

Running a docker container

docker run is the most important command. Here are basic use cases and options.

- docker run [image] creates a container from the specified image and runs it. If the image is not already cached locally, it will be downloaded from DockerHub. By default, the container runs and then returns you to the shell when it is done.

- If you want to interact with the container, you need to launch it with docker run -it [image]. Depending on the configuration of the container, you may also need to specify that you want to run a shell, e.g. docker run -it [image] bash

- Unless it is detached, a container only runs as long as the docker run process runs. Once you exit from a container, it stops, and the changes you made inside it are not carried over to new containers started from the same image.

- docker run -d [image] detaches the container, i.e. puts it in the background when it is launched.
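The launch modes above can be written out as follows. The function names are hypothetical wrappers, and --rm is an added option that deletes the container when it exits; leave it off if you want to keep the stopped container around.

```shell
# Run a container to completion in the foreground; its output goes to your terminal.
run_once() { docker run --rm "$1"; }

# Start an interactive terminal session with a bash shell inside the container.
run_shell() { docker run -it --rm "$1" bash; }

# Start detached (in the background); docker prints the new container's ID.
run_detached() { docker run -d "$1"; }

# Usage: run_shell ubuntu
```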

Monitoring running containers

- docker ps shows running containers. Each container can be referred to by name or ID.

- docker logs shows the output of a docker container.

- docker stats shows the resource utilization (CPU, memory, etc.) of running containers.
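A sketch combining the three monitoring commands for a single container. The helper name container_report is hypothetical; --tail and --no-stream are standard options that limit the logs to the last lines and print one stats snapshot instead of a live view.

```shell
# Hypothetical helper: one-shot status report for a container,
# given its name or ID as shown by docker ps.
container_report() {
  local id="$1"
  # List running containers (add -a to include stopped ones).
  docker ps
  # Show only the last 20 lines of the container's output.
  docker logs --tail 20 "$id"
  # Print a single snapshot of CPU/memory usage instead of a live table.
  docker stats --no-stream "$id"
}
# Usage: container_report 8a6f2b255457
```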

Working with running containers

- docker stop stops a running container. For example, docker stop 8a6f2b255457, where 8a6f2b255457 is the container ID you got from running docker ps.

- ctrl+p followed by ctrl+q detaches you from an interactive container without stopping it.

- docker exec -it [id] bash opens an interactive bash shell (if bash exists in the container) on a running container.

- docker commit -m 'message about commit...' [id] creates an image from a container. You can then move this image to another docker host to run it somewhere else, use it as a checkpoint on a long analysis if you think you might want to go back to this point, or share the image with others.
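The shell and checkpoint steps might look like this. The helper names and the tag myanalysis:step1 in the usage line are assumptions for illustration; docker commit expects its options before the container ID, with an optional repository:tag for the new image at the end. To move the image to another host you could then docker push it to a registry or export it with docker save.

```shell
# Open an interactive bash shell in a running container (bash must exist in the image).
open_shell() { docker exec -it "$1" bash; }

# Hypothetical helper: snapshot a container as a tagged image,
# e.g. as a checkpoint partway through a long analysis.
checkpoint() {
  local id="$1" tag="$2" message="$3"
  # Options come before the container ID; the last argument names the new image.
  docker commit -m "$message" "$id" "$tag"
}
# Usage: checkpoint 8a6f2b255457 myanalysis:step1 'after QC'
```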

Getting data in and out of docker containers

A Docker container has its own file system. By default, it cannot read or write host files or access host services (like databases). There are a few ways to get data in and out of a container.

- To mount host files within the Docker container so that they can be read and modified, run the container with docker run -v.

- To open ports on the Docker container for incoming web, database, and other access, run the container with docker run -p. This allows you, for example, to serve web sites from the container.

- Use the docker cp command on the host to get files in and out of the container. First run docker ps to get its Container ID, then run e.g. docker cp a14d91a64fea:/root/tmp Downloads/ to copy the /root/tmp directory from the container to the Downloads/ directory of the host.

- From within the container, use standard network protocols and tools such as git, wget, and sftp to move files in and out of the container.
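A minimal sketch of the -v, -p, and docker cp options. It assumes a web server image listening on port 80 inside the container (nginx is only an example) and mounts the current host directory at /data; those paths, ports, and the helper names are illustrative.

```shell
# Hypothetical helper: start a detached container that can read and write
# the current host directory at /data and is reachable on host port 8080.
run_with_data() {
  local image="$1"
  # -v HOST_PATH:CONTAINER_PATH bind-mounts a host directory into the container.
  # -p HOST_PORT:CONTAINER_PORT publishes a container port on the host.
  docker run -d -v "$PWD":/data -p 8080:80 "$image"
}

# Copy a file or directory out of a container (running or stopped) to the host.
copy_out() {
  local id="$1" src="$2" dest="$3"
  docker cp "$id:$src" "$dest"
}
# Usage: copy_out a14d91a64fea /root/tmp Downloads/
```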

Managing images and containers

As you use Docker more, you may need to clean things up once in a while to optimize resource utilization.

- docker ps -a shows all containers, including those that are running and those that are stopped. Stopped containers aren't using CPU or memory, but they do use disk space. You can use docker cp to get files in and out of them. This is useful if you remember something you needed after you stopped a container. It is a pain if you have a bunch of stopped containers that, like zombies, chew up your disk space.

- docker rm allows you to remove a container. This completely wipes it from your system.

- docker images shows all the docker images on your system.

- docker rmi allows you to remove images on your system. This can be useful for reclaiming disk space.
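Cleanup can be bundled into one hypothetical helper. The prune subcommands (docker container prune and docker image prune) remove all stopped containers and all dangling images in one go, rather than one ID at a time with docker rm and docker rmi; -f skips the confirmation prompt, so only use the helper when you are sure no stopped container is still needed.

```shell
# Hypothetical helper: reclaim disk space from stopped containers and unused images.
cleanup_docker() {
  # Show everything, including stopped containers, before pruning.
  docker ps -a
  # Remove all stopped containers (-f: do not ask for confirmation).
  docker container prune -f
  # Remove dangling images (untagged images no container uses).
  docker image prune -f
}
```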

Building Docker images

There are two general ways to distribute images. You can distribute a Dockerfile that has all the information needed to build an image. Dockerfiles provide explicit provenance and are very small. You can also distribute actual images. These can be huge (e.g. several gigabytes) and it isn't always clear what is in them, but they are ready to go.

Dockerfile

There is excellent documentation on Dockerfiles in the Dockerfile reference, and the Dockerfile Best Practices document is a great way to learn how to put this information to use.

You create a Docker image from a Dockerfile with the docker build command. It takes the path to the directory where the Dockerfile is. By default, docker build looks for a file named Dockerfile in that directory; use the -f option to point it at a file with a different name.

For example, you could cd to the directory where your Dockerfile is and then run docker build . (the trailing dot is the path to the current directory).

You can also provide a url to the Dockerfile, eg:

You can provide a github repo:

Where revision is the branch and docker is the folder within the repo that contains the Dockerfile

Using this last approach is a great way to distribute images without people even needing to clone your repository; they just need a single line to get and build the image.
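The three ways of pointing docker build at a Dockerfile can be sketched as follows. The helper names, the tag passed to -t, and the example URLs are placeholders; in the Git form, the part after # is the revision and the part after : is the folder that holds the Dockerfile.

```shell
# Build from the Dockerfile in the current directory and tag the result.
build_here() { docker build -t "$1" .; }

# Build from a Dockerfile published at a URL (a plain-text file).
build_from_url() { docker build -t "$1" "$2"; }

# Build from a Git repository without cloning it: REPO#REVISION:FOLDER.
build_from_git() {
  local tag="$1" repo="$2" revision="$3" folder="$4"
  docker build -t "$tag" "$repo#$revision:$folder"
}
# Usage: build_from_git myimage https://github.com/user/repo.git master docker
```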

Docker use cases

This section explains how to use Docker for a few example tasks.

Ubuntu container

It is often convenient to test analyses and tools on a clean Linux installation. You could make a fresh installation of Linux on a computer (which can take an hour or more and requires an available computer), launch a fresh Linux virtual machine on your own computer or a cloud service (this often takes a few minutes), or launch a Docker container (which takes seconds).

This command launches a clean interactive Ubuntu container:
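A sketch of such a launch, wrapped in a hypothetical helper. It assumes the official ubuntu image from Docker Hub, takes an optional tag (latest by default), and adds --rm so the container is deleted when you exit the shell.

```shell
# Hypothetical helper: throwaway interactive Ubuntu shell.
ubuntu_shell() {
  docker run -it --rm "ubuntu:${1:-latest}" bash
}
# Usage: ubuntu_shell        (or: ubuntu_shell 22.04)
```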

Note that this is a stripped-down image that has a couple of differences from a default Ubuntu installation. In particular, you are root inside the container, so there is no sudo command.

Agalma

A Docker image is available for our phylogenetic and gene expression tool Agalma. Take a look at the README for more information and instructions.

RStudio

Out-of-the-box

Docker is a convenient way to run RStudio if you want a clean analysis environment or need more computing power than is available on your local computer.

Launch an RStudio container with:
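A minimal sketch, assuming the rocker/rstudio image from Docker Hub. Recent rocker/rstudio images read the login password from the PASSWORD environment variable; with such an image you log in as user rstudio with the password you chose, which may differ from the default described below. The helper name is hypothetical.

```shell
# Hypothetical helper: start RStudio Server in the background on port 8787.
# Assumes the rocker/rstudio image; the user name is rstudio.
run_rstudio() {
  local password="$1"
  docker run -d -p 8787:8787 -e PASSWORD="$password" rocker/rstudio
}
# Usage: run_rstudio 'choose-a-password'
```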

Point your browser to xxx:8787, where xxx is the IP address of your instance. It will be localhost if you are running Docker locally on a Mac. If you are running Docker on a cloud instance, you will get it from the cloud console.

Enter rstudio for both username and password. You now have access to an RStudio instance running in a container!

You can use the git integration features of RStudio to get code and analyses in and out of the container.

Custom R environment

R has great package managers, but as projects grow in complexity it can be difficult to install and manage all the packages needed for an analysis. Docker can be a great way to provision R analysis environments in an explicit, reproducible, and customizable way. In our lab we often include a Dockerfile with our R projects that builds an image with all the dependencies needed to run the analyses.

If your analysis is in a private repository, clone the repository to the machine where you will build the image to run the analyses. Then cd to the directory with the Dockerfile, and run docker build . (the trailing dot is the path to the current directory).

If your Dockerfile is in a public git repository, then you can specify it with a url without cloning it, as described above.

Once you have built an image from the Dockerfile and have an image id, you can run a container based on the image and use git within the container to push your work back to the remote repository as you go.

As an example, consider the R analysis at https://github.com/caseywdunn/executable_paper .

Execute the following to build an image based on the Dockerfile on the master branch in the docker repository folder:
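For this repository, the build command can be sketched as below, using the Git URL form described earlier (master branch, docker folder). The helper name is hypothetical; adding -t with a name of your choice would let you refer to the image by that name instead of its ID.

```shell
# Hypothetical helper: build straight from GitHub, using the Dockerfile
# in the docker/ folder on the master branch.
build_executable_paper() {
  docker build https://github.com/caseywdunn/executable_paper.git#master:docker
}
```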

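If all goes well this will finish with the line Successfully built [image_id], where [image_id] is the image ID for the image you just built. With the newer BuildKit builder, which recent Docker versions use by default, that line is not printed; the ID instead appears in a line of the form writing image sha256:[image_id], and you can also find it with docker images.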

Run a container based on the image you built above (substituting [image_id] for the id you got above):
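A sketch of the run command, with the image ID passed as an argument to a hypothetical helper. docker run -d prints the ID of the new container.

```shell
# Hypothetical helper: run the built image detached, publishing container
# port 8787 (RStudio Server) on host port 8787.
run_analysis() {
  local image_id="$1"
  docker run -d -p 8787:8787 "$image_id"
}
# Usage: run_analysis [image_id]
```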

The -d specifies that the container should detach, giving you back the command line on the host machine while the container runs in the background. The -p 8787:8787 maps port 8787 in the container to port 8787 on the host, which we will use to connect to the RStudio GUI with a browser in a bit.

Run docker ps to get the container_id for the running container (this is different from the image_id that the container is based on).

To get ready to use git in the container, you need to configure it with your email address and name. This is done by opening a shell on the container, switching to the rstudio user with su, and entering some git commands (where the John Doe bits are replaced with your name and email address):
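The same configuration can also be done without an interactive shell, as sketched below with docker exec, which runs a command in a running container. This variant replaces the su step with the -u rstudio option and sets HOME explicitly; it assumes the rstudio user's home directory is /home/rstudio, as in the rocker images. The helper name and the example identity are placeholders.

```shell
# Hypothetical helper: set the Git identity of the rstudio user inside a
# running container, without opening a shell.
configure_git() {
  local container_id="$1" name="$2" email="$3"
  # -u runs the command as rstudio; HOME tells git where to write ~/.gitconfig.
  docker exec -u rstudio -e HOME=/home/rstudio "$container_id" git config --global user.email "$email"
  docker exec -u rstudio -e HOME=/home/rstudio "$container_id" git config --global user.name "$name"
}
# Usage: configure_git [container_id] 'John Doe' john.doe@example.com
```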

Next, point your browser to port 8787 at the appropriate IP address (e.g. http://localhost:8787 if running Docker on your local machine, or http://ec2-x-x-x.compute-1.amazonaws.com:8787 if running Docker on an Amazon EC2 instance, where ec2-x-x-x.compute-1.amazonaws.com is the public DNS name). Sign in with username rstudio and password rstudio. This will pull up a full RStudio interface in your web browser, powered by R in the container you just made.

Select File > New Project... > Version control > git, and enter https://github.com/caseywdunn/executable_paper.git for the Repository URL. Then hit the 'Create' button.

There will now be a git menu tab on the right side of the RStudio display in the browser window, and you can select the cloned files you want to work on in the lower right side of the page. Click the mymanuscript.rmd file to open it.

Now you can work on your project. Change files by saving edits, running code, or knitting the file. In this case, change some text in mymanuscript.rmd and save the changes.

Now you can commit your changes. Open the Git tab, click commit, select the Staged checkbox next to the files you have modified, enter a Commit message, and click commit. Then close the commit window that pops up.

Be sure to push your changes back to the remote repository before you destroy the container. This can also be done from the git window. Click the Push button. You will then be prompted for your username and password to push the changes. You cannot actually push changes in this example, since you don't have push permission on my example repository.

Using an RStudio container without the RStudio interface

RStudio is a great tool, but as your analyses grow you may find it is unstable. In particular, it sometimes does not work well when your projects include parallel code (e.g. mclapply() calls). In that case, you can still run your analyses at the command line in a Docker container, even when that container is based on a full RStudio image.

First build the image and get an image id as described above. Then run the container interactively with the following command on the Docker host:

From within the container, clone the repository and cd into it. For example:

To knit the manuscript:

Or, to run all the code at the R console (so you can dig into particular variables, for example):
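The command-line workflow above can be sketched as two hypothetical helpers: one run on the Docker host to start an interactive shell in a new container, and one run inside the container to clone and knit. It assumes that mymanuscript.rmd sits at the top level of the repository and that the rmarkdown package is installed in the image. For the console route, cd into the cloned folder and start R instead of calling Rscript.

```shell
# On the Docker host: start an interactive shell in a new container.
enter_analysis() { docker run -it "$1" bash; }

# Inside the container: clone the example repository and knit the manuscript.
knit_manuscript() {
  git clone https://github.com/caseywdunn/executable_paper.git
  cd executable_paper || return 1
  # Render the R Markdown file from the command line (no RStudio needed).
  Rscript -e "rmarkdown::render('mymanuscript.rmd')"
}
# Usage: enter_analysis [image_id], then knit_manuscript inside the container
```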

Running Docker in the cloud

At Amazon Web Services

You can run a Docker container (a virtual container) on an Amazon EC2 instance (a virtual computer). Why run your analyses in a virtual container on a virtual computer rather than directly on the virtual computer? Because it is more portable. You can play with a Docker container on your local computer, then launch an identical container on Amazon from the same image when you need to scale your analyses. No need to reconfigure and reinstall on a new environment.

This is a good resource for running Docker on AWS (and serves as the template for what I present here): http://docs.aws.amazon.com/AmazonECS/latest/developerguide/docker-basics.html .

Create an EC2 instance running Amazon Linux. If you need anything more than ssh access, add a custom rule to open a port (e.g. 8787 for the RStudio tutorial above).

Log in to the running EC2 instance using the URL provided in the console and the key you configured, e.g.:

Run the following on the EC2 instance:
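A sketch of the setup on a yum-based Amazon Linux instance: install the docker package, start the service, and add ec2-user to the docker group so docker can be run without sudo. The group change only takes effect at the next login, which is why you log out and back in. Package and service commands differ between Amazon Linux releases, so treat these as an assumption to check against the current AWS documentation; the helper name is hypothetical.

```shell
# Hypothetical helper, run on the EC2 instance (yum-based Amazon Linux).
install_docker_amazon_linux() {
  sudo yum update -y
  sudo yum install -y docker
  sudo service docker start
  # Let ec2-user run docker without sudo (takes effect at the next login).
  sudo usermod -a -G docker ec2-user
}
```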

Log out and then log back in. You can now run Docker within the running EC2 instance, for example:

At Digital Ocean

Docker is even simpler to set up on Digital Ocean. Here are the basics:

- Sign into https://www.digitalocean.com

- Click Create a droplet

- Select One-click apps

- Select a Docker image

Docker’s purpose is to build and manage compute images and to launch them in a container. So, the most useful commands do and expose this information.

Here’s a cheat sheet on the top Docker commands to know and use.

Images and containers

The docker command line interface follows this pattern:

docker <COMMAND>

The docker image and docker container commands give access to images and containers, and a subcommand then says what to do with them.

The container subcommands include:

- ls lists the resources.

- cp copies files/folders between the container and the local file system.

- create creates a new container.

- diff inspects changes to files or directories on a container's file system.

- logs fetches the logs of a container.

- pause pauses all processes within one or more containers.

- rename renames a container.

- run runs a command in a new container.

- start starts one or more stopped containers.

- stop stops one or more running containers.

- stats displays a live stream of container resource usage statistics.

- top displays the running processes of a container.
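Both spellings of the common listing commands are sketched below. The docker <object> <command> form (docker container ls, docker image ls) is equivalent to the older shorthands docker ps and docker images; the helper name is hypothetical.

```shell
# Hypothetical helper: list all containers and images using the
# docker <object> <command> form of the CLI.
list_all() {
  docker container ls -a   # same as: docker ps -a
  docker image ls          # same as: docker images
}
```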

View resources with ls

The container ls command shows each container's ID in its first column.

Control timing with start, stop, restart, prune

- start starts one or more stopped containers.

- stop stops one or more running containers.

- restart restarts one or more containers.

- prune (the best one!) removes all stopped containers.
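A sketch of stop and prune used together, with a hypothetical helper name. docker container prune asks for confirmation unless -f is given.

```shell
# Hypothetical helper: stop one container by name or ID, then remove
# every stopped container (including the one just stopped).
stop_and_prune() {
  docker container stop "$1"
  docker container prune -f
}
# Usage: stop_and_prune 8a6f2b255457
```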

Name a container
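Containers get an automatically generated name unless you give one. Two hypothetical helpers are sketched below: --name assigns a name when the container is created, and docker container rename changes it afterwards; names can then be used wherever a container ID is accepted.

```shell
# Create and start a detached container with a chosen name: run_named IMAGE NAME
run_named() { docker container run -d --name "$2" "$1"; }

# Rename an existing container: rename_container OLD_NAME NEW_NAME
rename_container() { docker container rename "$1" "$2"; }
```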

View vital information: Inspect, stats, top

- stats displays a live stream of container(s) resource usage statistics

- top displays the running processes of a container:

- inspect displays detailed information on one or more containers. It returns JSON describing each container's name, state, and more.
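A sketch pulling the three together for one container. The helper name is hypothetical; --format takes a Go template, here used to print only the container's status (for example running or exited) from the inspect JSON.

```shell
# Hypothetical helper: resource snapshot, process list, and status of a container.
container_vitals() {
  local id="$1"
  docker container stats --no-stream "$id"
  docker container top "$id"
  # Print one field of the inspect JSON instead of the whole document.
  docker container inspect --format '{{.State.Status}}' "$id"
}
```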

Additional resources

For more on this topic, there’s always the Docker documentation, the BMC DevOps Blog, and these articles: